Understanding Muon Optimizer from the Perspective of Explore-Exploit Trade-Off

Intuitive Understanding

First, the author believes that Muon has achieved its current effectiveness because it captures some fundamental characteristics of model training that go beyond what AdamW provides. So what is this characteristic? The author offers the following hypothesis.

Adam and SGD-based optimizers typically treat the parameter gradients as vectors and apply smoothing filters that incorporate historical gradients. These filters don’t drastically change the direction of the gradient vector—they mostly adaptively adjust step sizes and stabilize the optimization direction.

Muon’s key difference lies in how it treats 2D weight matrices: it uses 2D gradient matrices and significantly alters their directional vectors after applying a moving average filter. This alteration is achieved through an approximate SVD decomposition (using the Newton-Schulz iteration), which scales all singular values that contribute positively to the gradient direction to 1. This means Muon updates all effective singular vector directions with same priority. The authors of Muon (Keller Jordan) speculate that this is effective because it amplifies directions associated with small but important singular values.

From another perspective, this can be seen as a more rational way to explore on a rugged loss surface by incorporating matrix transformation information, while traditional vector-based methods like Adam and SGD mainly focus on exploiting the gradient direction with some smoothing. As models and optimization spaces grow larger, such methods are more likely to get trapped in local optima and miss important features early in training. Why is this form of exploration viable? Because even if an update overshoots in some directions, the subsequent gradients will naturally correct it. In this sense, gradient computation is already a strong enough form of exploitation, so introducing some exploration won’t harm the overall convergence—and may even improve it.

Moreover, overly aggressive exploitation by Adam-like methods on rugged loss landscapes may prevent convergence to robust feature combinations. The Muon paper also notes that the gradient matrices of transformers tend to have large condition numbers but low rank, meaning updates are concentrated in a few large singular value directions—an indication of over-exploitation. Therefore, Muon’s optimization can be seen as an improvement of the overall training dynamics, striking a better balance between exploration and exploitation, allowing the model to discover more robust feature combinations by trying multiple directions early, instead of immediately descending along a few maximal gradient paths.

Key Takeaways

- Muon utilizes the orthogonal basis directions and singular value information of the matrix-as-linear-transformation perspective.

- It normalizes singular values—thus avoiding extreme exploitation and instead encouraging structured exploration along effective gradient directions identified by the SVD.

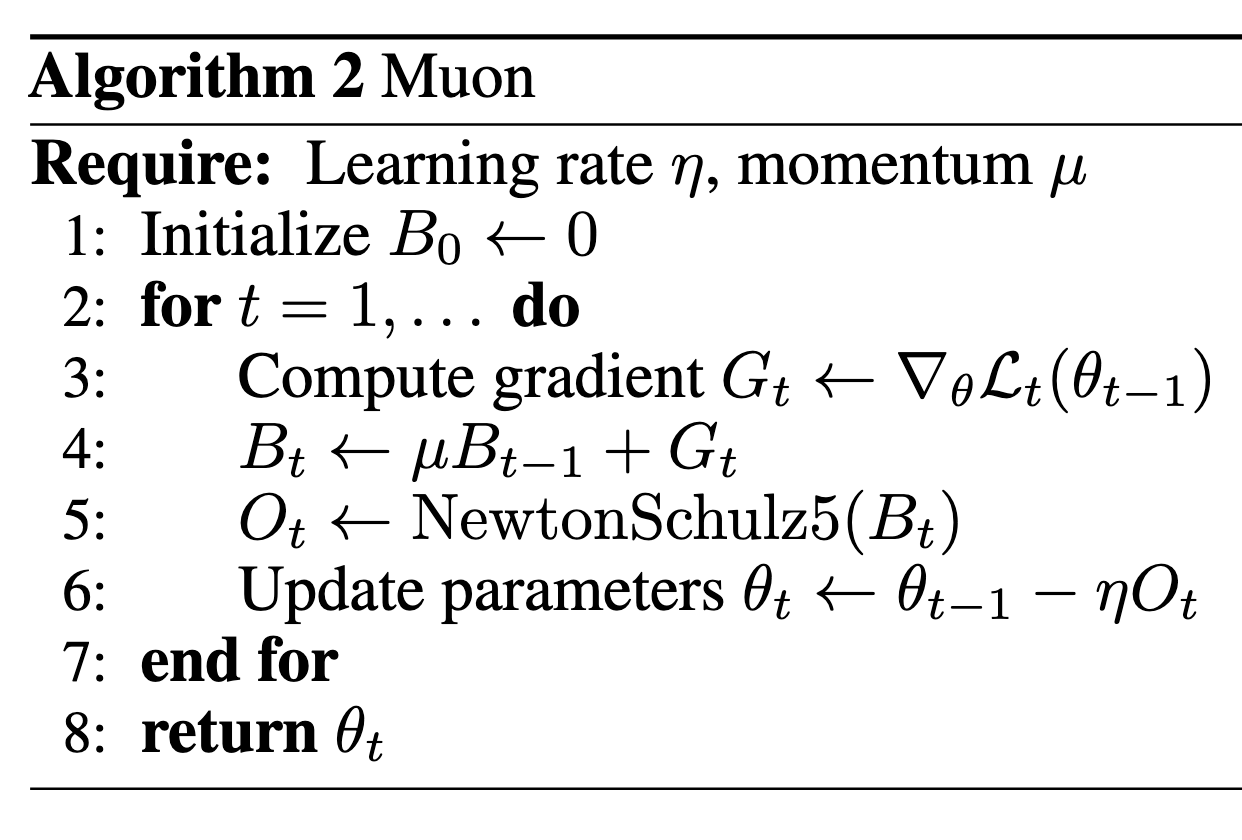

Muon’s Specific Computational Process

- $G_t$: Gradient at time step $t$

- $B_t$: Smoothed gradient momentum

NewtonSchulz5(): Uses Newton-Schulz iteration to approximate a normalized positive semi-definite matrix from the gradient.

Assuming the singular value decomposition (SVD) of $B_t \in \mathbb{R}^{m \times n}$ is:

\[B_t = U \Sigma V^\top\]Where:

- $U \in \mathbb{R}^{m \times m}$: Orthogonal matrix of left singular vectors

- $\Sigma \in \mathbb{R}^{m \times n}$: Diagonal matrix with non-negative real singular values

- $V \in \mathbb{R}^{n \times n}$: Orthogonal matrix of right singular vectors

Then, $O_t$ is approximately:

\[O_t \approx \sum_{i=1}^{r} u_i\, v_i^\top\]Where $r$ is the rank of the matrix, and $u_i, v_i$ are the singular vectors corresponding to the $i$-th singular value $\sigma_i$, i.e., the $i$-th columns of $U$ and $V$, respectively.

Phenomenon Interpretation

Su Jianlin’s article notes that Muon pretraining must be paired with Muon-based finetuning to achieve the best results. Mixing with Adam-like methods doesn’t work well.

This is because Muon and Adam-like optimizers tend to converge in different feature spaces. Mixing the two causes divergence in optimization direction, which leads to suboptimal results.

Both Muon’s authors (Keller Jordan) and Su’s article mention that QKV projections should be optimized separately.

These layers perform different feature transformations in different spaces, and each needs its own SVD transformation.

The Muon blog also states that when training transformers, embedding layers and the final classifier head layers should still use AdamW.

This might be because those layers aren’t strictly performing feature transformations, or because the classifier layer is more prone to over-exploring in a way that can’t be easily recovered by exploitation.

Su Jianlin also mentions that models trained with Muon tend to have larger singular values in their matrices.

This is because Muon’s exploration process increases the gain of directions with small singular values, thereby raising more singular values overall compared to Adam. This also means that QK logits are more likely to explode.

What’s Next

Is there a better explore-exploit trade-off point, or a better method of exploration? Currently, Muon may represent a well-balanced approach—its exploration is done by using gradients to “recall” effective singular directions, while normalizing singular values also acts as a form of filtering, increasing robustness.

Reducing exploration is easy, but can we safely increase exploration further? For example, could we add noise to the normalized singular values?